Finding Deleted Webpages

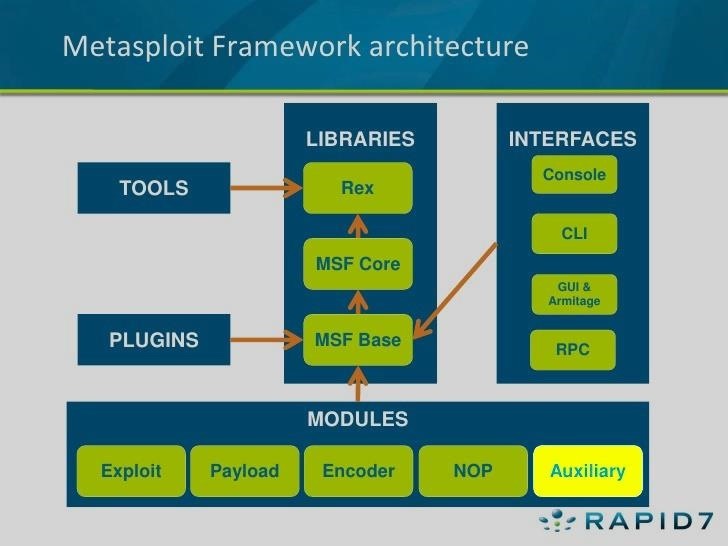

Throughout this series on Metasploit, and in most of my hacking tutorials here on Null Byte that use Metasploit (there are many; type "metasploit" into the search bar and you will find dozens), I have focused primarily on just two types of modules: exploits and payloads. Remember, Metasploit has six types of modules:

Exploit

Payload

Auxiliary

NOP (no operation)

Post (post exploitation)

Encoder

Although most hackers and pentesters focus on the exploits and payloads, the auxiliary modules offer significant capability that is often overlooked. Measured by lines of code, about one-third of Metasploit consists of auxiliary modules. These modules cover the capabilities of many other tools a hacker needs, including various types of scanners (Nmap among them), denial-of-service modules, fake servers for capturing (admin) credentials, fuzzers, and many more.

In this tutorial, I want to explore and illuminate one of the auxiliary modules that can make hacking with Metasploit much more effective and efficient.

Step 1: List the Modules

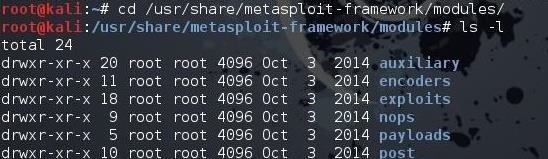

First, let's fire up Kali and open a terminal. In Kali, Metasploit modules are stored at:

/usr/share/metasploit-framework/modules

Let's navigate there:

kali > cd /usr/share/metasploit-framework/modules/

Then, let's list the contents of that directory:

kali > ls -l

As you can see, there is a subdirectory for each of the six module types mentioned earlier (newer versions of Metasploit also add a seventh, evasion). Let's focus our attention on the auxiliary modules.

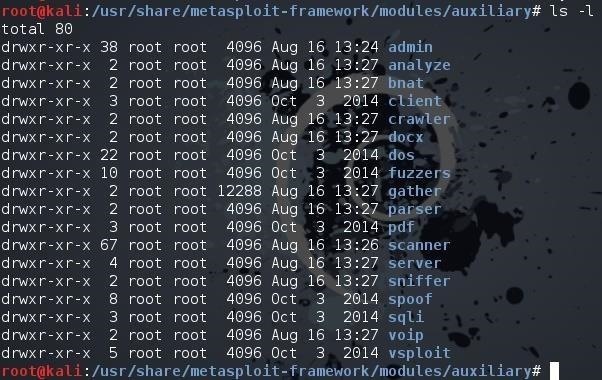
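If you'd like to check the one-third figure against your own install, the short Python sketch below walks the modules directory and totals the lines in each module type's .rb files. It assumes the Kali path above and counts only Ruby files; "lines of code" here means raw line counts, comments and blank lines included, so treat the percentages as rough.

```python
"""Rough lines-of-code tally per Metasploit module type (a sketch)."""
from collections import Counter
from pathlib import Path

# Kali default from this tutorial; adjust if your install lives elsewhere.
MODULES_ROOT = Path("/usr/share/metasploit-framework/modules")


def lines_per_module_type(root: Path) -> Counter:
    """Return {module_type: raw line count} summed over every *.rb file under root."""
    totals: Counter = Counter()
    for path in root.rglob("*.rb"):
        # The first directory below root is the module type (auxiliary, exploits, ...).
        module_type = path.relative_to(root).parts[0]
        with path.open(encoding="utf-8", errors="replace") as fh:
            totals[module_type] += sum(1 for _ in fh)
    return totals


if __name__ == "__main__":
    totals = lines_per_module_type(MODULES_ROOT)
    grand_total = sum(totals.values()) or 1  # avoid dividing by zero on an empty tree
    for module_type, count in totals.most_common():
        print(f"{module_type:<12} {count:>10,} lines  {100 * count / grand_total:5.1f}%")
```

Your numbers will vary with the Metasploit version installed.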

Step 2: List the Auxiliary Modules

First, navigate to the auxiliary module directory and list its contents:

kali > cd auxiliary

kali > ls -l

As you can see, there are numerous subdirectories of auxiliary modules. In an earlier tutorial, I pointed out that there are hundreds of auxiliary modules for denial of service (DoS) in Metasploit. In this tutorial, we want to work with the scanner modules, which live in the scanner subdirectory. Metasploit has scanning modules of just about every type, including Nmap-style port scanning and website vulnerability scanning.

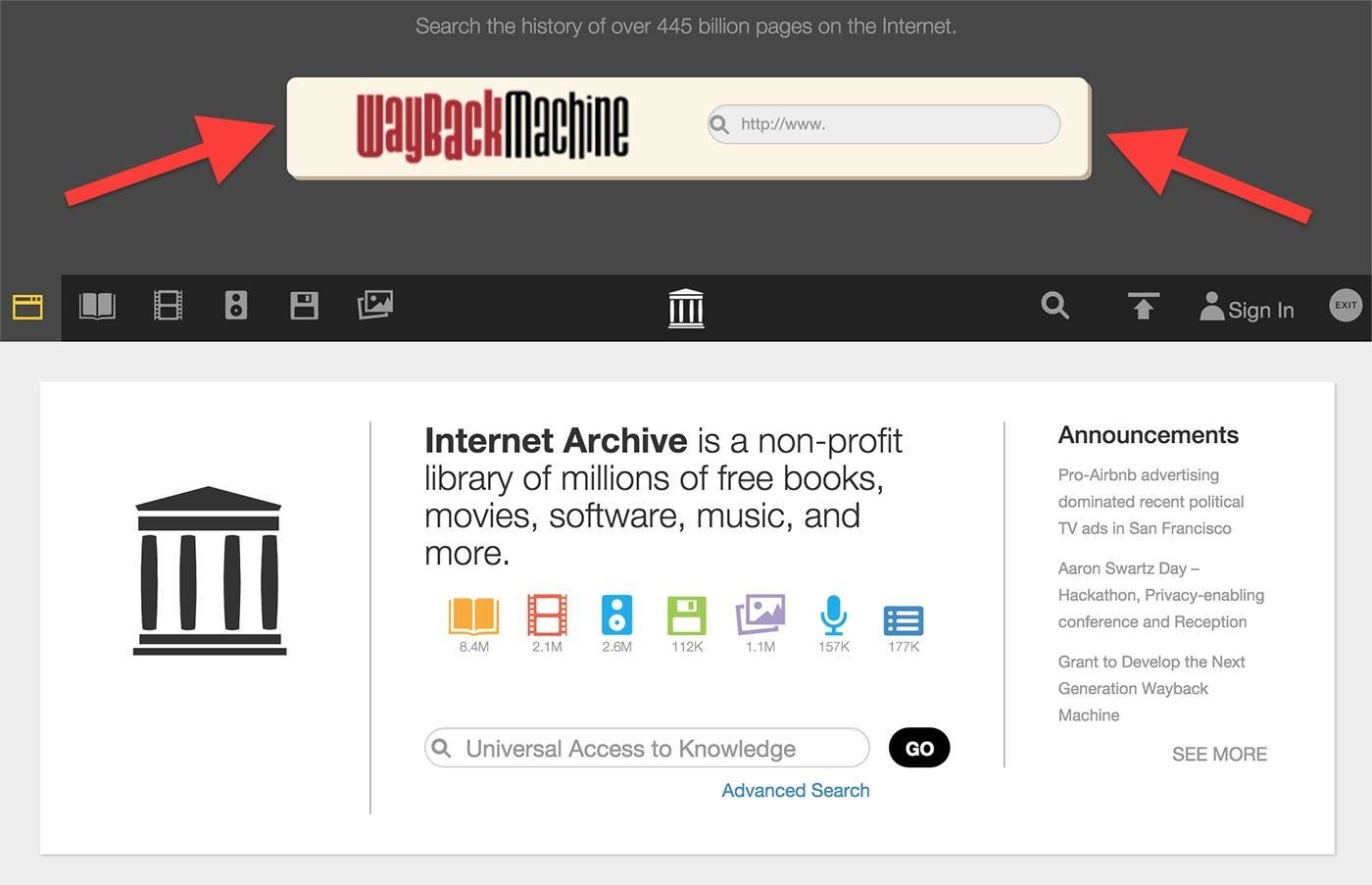
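The name you pass to use in msfconsole mirrors the module's path under the modules directory, minus the .rb extension. For auxiliary modules the directory name and the name prefix match, so a sketch like the one below can list every module name in a subdirectory such as auxiliary/scanner/http. (Exploits, payloads, encoders, and NOPs use singular prefixes such as exploit/, which this sketch does not handle.)

```python
"""List use-style names for auxiliary modules under a subdirectory (a sketch)."""
from __future__ import annotations

from pathlib import Path

MODULES_ROOT = Path("/usr/share/metasploit-framework/modules")


def auxiliary_module_names(root: Path, subdir: str) -> list[str]:
    """Map root/<subdir>/**/*.rb to names like auxiliary/scanner/http/enum_wayback.

    Only valid for auxiliary (and post) modules, whose directory name
    matches the module-name prefix.
    """
    return sorted(
        path.relative_to(root).with_suffix("").as_posix()
        for path in (root / subdir).rglob("*.rb")
    )


if __name__ == "__main__":
    for name in auxiliary_module_names(MODULES_ROOT, "auxiliary/scanner/http"):
        print(name)
```

Piping the output through grep wayback should turn up the module we use later in this tutorial.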

Step 3: The Wayback Machine

As many of you know, recent years have seen an increased emphasis on information security. Webmasters and IT security personnel are more vigilant about what goes onto their websites, making sure that nothing that could be used to compromise their security gets posted.

If we go back just a few years, that was not the case. Companies would often post email addresses, passwords, vulnerability scans, network diagrams, and the like on their websites, unaware that someone might find them and use them for malicious purposes. Although I still occasionally find a company listing email addresses and passwords on its website, this has become much less common.

Fortunately for us, nothing ever disappears from the web. If it's here today, it will be here 20 years from now (keep that in mind when posting on Facebook or other social media sites).

The Internet Archive (Archive.org) was established to preserve free books, movies, music, and software, along with old web content, which it makes available through its Wayback Machine tool (a reference to an old cartoon in which the main characters, Mr. Peabody and Sherman, traveled back in time in a time machine they called the "WABAC Machine"). This means that if a company once posted email addresses, passwords, or Nessus scans on its webpages, that content is likely still around somewhere on Archive.org.

Step 4: Use Metasploit to Retrieve Deleted Webpages

Fortunately for us, Metasploit has an auxiliary module that can retrieve all of the old URLs that Archive.org has stored for a particular domain. Because the Internet Archive's tool is called the Wayback Machine, the module is named enum_wayback, short for "enumerate Wayback Machine."

Let's start the Metasploit console and load it:

kali > msfconsole

When the msfconsole opens, let's load the wayback module by typing:

msf > use auxiliary/scanner/http/enum_wayback

This module has just two parameters we need to set:

DOMAIN: the domain we want to search for on Archive.org

OUTFILE: the file we want to save the results in

Since we will be using SANS.org as our target, let's save the output to a file named sans_wayback:

msf > set OUTFILE sans_wayback

Next, let's set the domain to our favorite IT Security domain:

msf > set DOMAIN sans.org
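If you plan to run this module often, you don't have to type these commands into the console each time. msfconsole accepts -q (skip the banner) and -x (run a semicolon-separated list of console commands), so the sketch below assembles the same use/set/run sequence and hands it to msfconsole. It assumes msfconsole is on your PATH, as it is on Kali; run, covered in the next step, is what actually starts the module.

```python
"""Run enum_wayback non-interactively via msfconsole -q -x (a sketch)."""
from __future__ import annotations

import shlex
import subprocess

MODULE = "auxiliary/scanner/http/enum_wayback"


def msfconsole_command(domain: str, outfile: str) -> list[str]:
    """Build an argv that loads the module, sets both options, runs it, and exits."""
    console_commands = "; ".join([
        f"use {MODULE}",
        f"set DOMAIN {domain}",
        f"set OUTFILE {outfile}",
        "run",
        "exit",
    ])
    # -q skips the startup banner; -x executes the ;-separated console commands.
    return ["msfconsole", "-q", "-x", console_commands]


if __name__ == "__main__":
    argv = msfconsole_command("sans.org", "sans_wayback")
    print("Running:", shlex.join(argv))
    subprocess.run(argv, check=True)
```

Because OUTFILE is a relative name here, the file should end up in whatever directory you launched the script from.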

Step 5: Start the Wayback Machine

Whereas we usually launch exploits by typing exploit, auxiliary modules are conventionally started by typing run:

msf > run

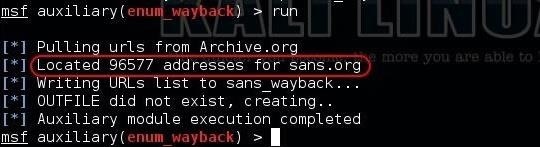

This module will now go to Archive.org and retrieve every URL of SANS.org that has been saved over the years. In the case of SANS.org, that comes to over 96,000 URLs! Because we told the module to store the URLs in a file named sans_wayback, they are all written to that file.
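For the curious, here is roughly how a list like this can be pulled from the Internet Archive directly. The sketch queries the Wayback Machine's public CDX API, asking for every archived URL under a domain, one row per distinct URL. We are not claiming this is the exact request enum_wayback sends, and a domain the size of SANS.org may need the API's paging options, which the sketch leaves out.

```python
"""List a domain's archived URLs via the Wayback Machine CDX API (a sketch)."""
from __future__ import annotations

import json
import urllib.parse
import urllib.request

CDX_ENDPOINT = "https://web.archive.org/cdx/search/cdx"


def cdx_query_url(domain: str) -> str:
    """Build a CDX query for every archived URL under the domain's root."""
    params = {
        "url": f"{domain}/*",   # everything below the domain root
        "output": "json",       # rows as JSON arrays; row 0 is the header
        "fl": "original",       # return only the original URL field
        "collapse": "urlkey",   # one row per distinct URL
    }
    return f"{CDX_ENDPOINT}?{urllib.parse.urlencode(params)}"


def parse_cdx_json(body: str) -> list[str]:
    """Turn a CDX JSON response into a flat list of URLs, header row dropped."""
    rows = json.loads(body) if body.strip() else []
    return [row[0] for row in rows[1:]]


if __name__ == "__main__":
    with urllib.request.urlopen(cdx_query_url("sans.org"), timeout=120) as resp:
        urls = parse_cdx_json(resp.read().decode("utf-8"))
    print(len(urls), "archived URLs")
```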

When the module is done running, we can look inside this file by typing:

kali > more sans_wayback

When we do, we can see that the first URL Archive.org stored dates from 1998. With over 90,000 URLs, visually inspecting the list for interesting information is not really practical. Fortunately, God gave us the grep command.
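Before grepping for keywords, it can help to see where the URLs cluster. The sketch below reads the sans_wayback file, assuming one URL per line in the current directory, and counts URLs by the first segment of their path, so that busy directories stand out.

```python
"""Count archived URLs by first path segment (a sketch)."""
from collections import Counter
from urllib.parse import urlsplit


def urls_by_first_segment(lines) -> Counter:
    """Tally URLs by the first segment of their path; "/" means the site root."""
    counts: Counter = Counter()
    for line in lines:
        url = line.strip()
        if not url:
            continue
        if "://" not in url:
            url = "//" + url  # let urlsplit treat a bare host as the netloc
        segments = [s for s in urlsplit(url).path.split("/") if s]
        counts[segments[0] if segments else "/"] += 1
    return counts


if __name__ == "__main__":
    # Assumes the OUTFILE holds one URL per line.
    with open("sans_wayback", encoding="utf-8", errors="replace") as fh:
        for segment, n in urls_by_first_segment(fh).most_common(25):
            print(f"{n:>7,}  {segment}")
```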

If we want to find the URLs that contain the word "email", we can type:

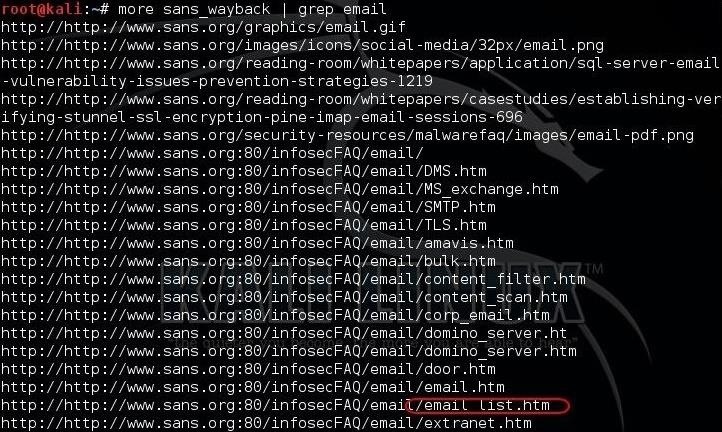

kali > more sans_wayback | grep email

As you can see in the screenshot above, there are quite a few URLs pertaining to email. The one I have circled in red, email_list.htm, looks particularly interesting.

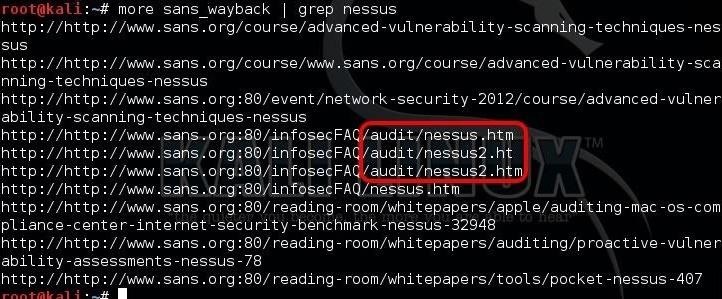

It is not unheard of for companies to post their vulnerability scans on their websites for their security and network personnel to view (and, inadvertently, the rest of us). Since Nessus is the world's most widely used vulnerability scanner, let's see whether any of these old webpages mention it.

kali > more sans_wayback | grep nessus

As you can see above, we have found three webpages with Nessus in them in the "audit" directory. Hmmm... that might be interesting... maybe some Nessus scan reports from the past?
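Keep in mind that grep is case-sensitive by default, so grep nessus would miss a URL spelled Nessus (grep -i fixes that). If you want to check several keywords in one pass, a sketch like the following does a case-insensitive search for each keyword and groups the matches. The keyword list is our own illustrative choice, not something the module provides.

```python
"""Case-insensitive, multi-keyword search over the URL list (a sketch)."""
from __future__ import annotations

KEYWORDS = ["email", "nessus", "password", "audit"]  # illustrative choices


def find_keywords(lines, keywords: list[str]) -> dict[str, list[str]]:
    """Return {keyword: [urls containing it, ignoring case]}."""
    hits: dict[str, list[str]] = {k: [] for k in keywords}
    for line in lines:
        url = line.strip()
        lowered = url.lower()
        for keyword in keywords:
            if keyword.lower() in lowered:
                hits[keyword].append(url)
    return hits


if __name__ == "__main__":
    with open("sans_wayback", encoding="utf-8", errors="replace") as fh:
        for keyword, urls in find_keywords(fh, KEYWORDS).items():
            print(f"== {keyword}: {len(urls)} URL(s)")
            for url in urls:
                print("  ", url)
```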

This approach to finding deleted information is limited to what appears in the URL itself. We could take these URLs and view them in our browser at Archive.org to see whether the pages contain useful text. A better approach might be to use HTTrack to download the interesting URLs that this module enumerated to our hard drive, then run a text search across the entire web content.
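As a lighter-weight alternative to HTTrack when you only have a handful of URLs, the sketch below asks the CDX API for the successful (HTTP 200) captures of a single URL, fetches each capture, and reports which keywords appear in the page text. The id_ suffix in the playback URL requests the page as originally captured, without the Wayback Machine's toolbar. The keyword list is illustrative, and the URL to check is taken from the command line rather than hard-coded.

```python
"""Fetch archived captures of one URL and search their text (a sketch)."""
from __future__ import annotations

import json
import sys
import urllib.parse
import urllib.request

CDX_ENDPOINT = "https://web.archive.org/cdx/search/cdx"
KEYWORDS = ["@", "password", "nessus"]  # illustrative choices


def captures_query(url: str) -> str:
    """CDX query listing successful (HTTP 200) captures of one exact URL."""
    params = {
        "url": url,
        "output": "json",
        "fl": "timestamp,original",
        "filter": "statuscode:200",
        "collapse": "digest",  # skip back-to-back captures with identical content
    }
    return f"{CDX_ENDPOINT}?{urllib.parse.urlencode(params)}"


def playback_url(timestamp: str, original: str, raw: bool = True) -> str:
    """Wayback playback URL; the id_ flag asks for the unmodified capture."""
    flag = "id_" if raw else ""
    return f"https://web.archive.org/web/{timestamp}{flag}/{original}"


def keywords_in(text: str, keywords: list[str]) -> list[str]:
    """Keywords that appear in text, ignoring case."""
    lowered = text.lower()
    return [k for k in keywords if k.lower() in lowered]


if __name__ == "__main__":
    target = sys.argv[1]  # a URL copied from the sans_wayback file
    with urllib.request.urlopen(captures_query(target), timeout=60) as resp:
        rows = json.loads(resp.read() or b"[]")
    for timestamp, original in rows[1:]:  # row 0 is the header
        snapshot = playback_url(timestamp, original)
        with urllib.request.urlopen(snapshot, timeout=60) as page:
            text = page.read().decode("utf-8", errors="replace")
        found = keywords_in(text, KEYWORDS)
        if found:
            print(snapshot, found)
```

Each capture is a separate request, so be patient (and polite to Archive.org) with URLs that were archived many times.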
